With the frontend work out of the way, we turn our attention to a new challenge, transforming this funny looking tree structure into something that a processor can understand (using LLVM of course, duh).

Although LLVM does not provide any Rust libraries, it does provide header files to invoke in C/C++. Rust was designed to have extremely powerful FFI functionality (foreign function interface). The standard library has an entire module in it’s standard library dedicated to using Rust to invoke function from different languages. This is enough for us to actually use Rust with LLVM headers, but requires us to create bindings to the API, which can take a lot of work. We can further reduce the work needed to incorporate LLVM by using inkwell, which is a safe Rust wrapper around the bindings, generated automatically with bindgen, that allows us to use LLVM, while upholding the Rust memory model.

Inkwell is a safe wrapper library, where it attempts to uphold the same memory guarantees Rust offers, while allowing users to work with LLVM. Because of this, many things might be different from how one might work with the C/C++ headers. This is where my Rust implementation of Kaleidoscope might differ a bit to the original C++ implementation showcased in the tutorial. Expect to see some function return validated Rust references, enums rather than pointers, C++ polymorphic objects. Expect to see certain functions, methods exist in the wrapper that are not present in the original headers, and vice versa. Also, inkwell is a work in progress, so some API functionality might not even exist yet. Nevertheless, the functionality that is there is more than enough to implement this simple toy language, in addition to being a bit more straightforward, easy-to-use compared to the raw header interface.

Installing LLVM, Setting Up a Cargo Project with Inkwell Crate

For starters, before we even touch on using LLVM APIs, we must first install LLVM on our workstation. Developers have a few choices here, as described :

- Build LLVM from source and install on your local machine. Can be done on both Windows and Linux. Requires a few dependencies, but most likely you have them all already installed. (Git, a recent version of Python3, CMake). Follow steps on llvm.org here.

- Install packages with pre-built binaries. Could be as simple as using the package manager on your flavor of GNU/Linux to download everything you need. For Debian-based systems like Ubuntu, look at https://apt.llvm.org/ for a handy script that will install all necessary packages.

The first option sounds rather intimidating, but the LLVM website has more than enough guidance to help you build everything from source. Do be ready to leave your desk and do something else, since build times for this gargantuan C++ codebase can take 30-45 minutes, even with multiple threads! So idle yourself for a bit with a coffee break, a gaming session, in case you do plan to build from source. If you do decide to install via packages, then you shouldn’t have to wait long at all.

This is not to say that building from source is discouraged. In fact, building from source might be necessary down the road when we need to cross-compile LLVM to help build our implementation for a different platform, but this is a blog post for a different day.

The version of LLVM does matter here. Inkwell supports versions 4-17 as of writing this (I think they might be at 18 by the time I publish!). I will be using LLVM 17.0.6, Inkwell version 0.4.0. Set up your cargo workspace with the inkwell dependency by adding this in to Cargo.toml. Be aware that, in order to compile the inkwell crate listed below, be sure your installation of LLVM is reachable in your PATH.

[dependencies]

inkwell = { version = "0.4.0", features = ["llvm17-0-force-static"] }An Introduction to LLVM Intermediate Representation

The intermediate representation, or IR, of LLVM is the currency of sorts to the entire system. It is not only a vehicle to generate code for the target CPU architecture machine language, but also is passed around to optimization functions, code analysis, and so on. If LLVM is a human body, then the IR is the blood which carries resources and information to all extremities of a compiler in a way that all parts of it understand, communicated via IR.

Because of this vital role, the form that the IR takes has to be low-level enough to communicate backend specific information to code generation and optimization so that it eventually boils down to CPU instructions, while at the same time being high-level enough to be generalized across different CPU architectures and transformable from a variety of source code. All the while being wrapped around an API that will allow compiler engineers to program new optimizations, analyses, and new languages for a particular frontend. A monumental task to be sure, but executed well enough for wannabe compiler engineers like me to use it two decades after its release.

The IR is a standalone language in and of itself, existing in three different forms, including a human-readable text form that looks something akin to a mix of C and assembly. Observe this simple bit of C compiled with the LLVM C compiler, Clang (courtesy of compiler explorer at godbolt.org), with –emit-llvm flag to produce IR.

float circleArea(float radius) {

return ((radius*radius)*3.14);

}define dso_local noundef float @circleArea(float noundef %0) local_unnamed_addr #0 {

%2 = fmul float %0, %0

%3 = fpext float %2 to double

%4 = fmul double %3, 3.140000e+00

%5 = fptrunc double %4 to float

ret float %5

}We have our circleArea function here compiled to the corresponding IR. The IR identifies global and local variables with the “@” and “%” characters respectively. The mathematical expression ((radius*radius)*3.14) was broken down into 6 different lines that each have an LLVM instruction tied to them (fmult, fpext, ret). Multiplication happens piece by piece, similar to how each value would be loaded into registers and multiplied together in assembly.

Unlike assembly, we also see that mnemonics like fmult (floating point multiplication) also return values as if they were functions instead of instructions to CPU. These returned values are stored in local variables named with ascending numbers. These instructions also have strict typing rules like C, so a floating point multiplication instruction needs to accept variables of float, returning a float.

Another thing about the variables is that they are assigned only once as part of a strategy called single static assignment, or SSA. If %x where to be assigned in IR, any change would have to be reflected in a new variable, %x’, %x2 or similar. SSA enables optimization algorithms for a number of different areas, including register allocation strategies, dead code elimination, and much more! SSA is popular enough to be used in a lot of different IR outside of LLVM so its wikipedia entry is work a read at the very least.

There are a plethora of other nuances to IR, including basic blocks, modules, and attributes, that can be further groked from LLVM documentation. Consider this a brief overview. I found it beneficial as well to toy with compiling different bits of C using the online compiler explorer website godbolt.org. Use any clang variation with -S –emit-llvm flags to compile any bit of C code to corresponding IR. Same can be done with the Rust compiler as well with –emit=llvm-ir flag!

Constructing Core LLVM Objects with Inkwell

With both LLVM and inkwell set up, the first thing to do in the code is define some very core LLVM objects that are the foundation to working with the API. As readers could image, there is a lot of different data structures, algorithms, and behind-the-scenes magic that allows IR to be maintained in memory, usable via APIs. These objects are contexts, builders, modules, and are responsible for both encapsulation of that complexity, but also an interface to allow users to, say, build an LLVM instruction, create constants, define a type, create a function, and so on. The tutorial defines these as static variables, along with a map of string to LLVM Value mappings to keep track of what variables are defined and what their values are.

static std::unique_ptr<LLVMContext> TheContext;

static std::unique_ptr<IRBuilder<>> Builder(TheContext);

static std::unique_ptr<Module> TheModule;

static std::map<std::string, Value *> NamedValues;Inkwell is very much the same in this regard. We can construct these in a very similar way. The only thing that has Rust ownership here is the context object, everything else is a reference derived from the context. See docs

The inkwell Github repository even contains a bit of code to help developers get started creating these objects. Looking at the README here, you can find that the sample code creates a struct containing all these different core objects.

struct CodeGen<'ctx> {

context: &'ctx Context,

module: Module<'ctx>,

builder: Builder<'ctx>,

execution_engine: ExecutionEngine<'ctx>,

}We will do something similar here, I will call mine just LLVMContext. We do not need an execution engine at the moment so will skip that. We will also include the NamedValues map here as an internally mutable hashmap of names to LLVM values. We will also add a publicly available constructor here, so we can in invoke it with the owned context.

// backend/llvm_backend.rs

#[derive(Debug)]

pub struct LLVMContext<'ctx> {

context: &'ctx Context,

builder: Builder<'ctx>,

module: Module<'ctx>,

sym_table: RefCell<HashMap<String, AnyValueEnum<'ctx>>>,

}

impl<'ctx> LLVMContext<'ctx> {

pub fn new(context: &'ctx Context) -> Self {

let builder = context.create_builder();

let module = context.create_module("kaleidrs_module");

Self {

context,

builder,

module,

sym_table: RefCell::new(HashMap::new()),

}

}

}

// do all this gruntwork of setting up LLVM internals with just

// let ctx = Context::create();

// let session_context = LLVMContext::new(&context);

// Use builders by just doing

// session_context.builder.build_float_add()

// session_context.module.add_function()My Approach to IR Emission With a Trait

For this part of the blog, will be heavily referencing the docs for inkwell crate, located at https://thedan64.github.io/inkwell/inkwell/index.html.

For the original tutorial, the implementation of the backend and IR generation begins with an addition of a method to the base ExprAST class. The method is called codegen, and really does what it says on the tin. Generates LLVM IR code. It’s a virtual method of the AST base class, overridden by children. This allows for dynamically dispatched IR generation, where one kind of AST node in the tree may generate one kind of IR, say for creating numeric constants, while a function definition node might build a function definition in IR. This method can be called recursively, where a parent, say a function, will call codegen for it’s body.

/// ExprAST - Base class for all expression nodes.

class ExprAST {

public:

virtual ~ExprAST() = default;

virtual Value *codegen() = 0;

};

/// NumberExprAST - Expression class for numeric literals like "1.0".

class NumberExprAST : public ExprAST {

double Val;

public:

NumberExprAST(double Val) : Val(Val) {}

Value *codegen() override;

};These codegen functions return a pointer to a LLVM Value. Which could be a variety of different things, from functions, to floating point values, and everything our language might represent in between. The formal definition for it is anything stored in an SSA register. Value in this case is probably a base virtual class derived from a lot of different LLVM constituents. The wrapper library provides a enum we can use to better categorize all these possible values.

To begin with the transformation of a Kaleidoscope AST into IR, we will first create a trait for our tree nodes. This trait will have a single method to it, codegen, which will accept a reference to a context object we defined above, and and return an LLVMValue of any variety.

// in llvm_backend.rs, this

// Short type alias, IR generation could fail

// AnyValueEnum docs:

// https://thedan64.github.io/inkwell/inkwell/values/enum.AnyValueEnum.html

type IRGenResult<'ir> = Result<AnyValueEnum<'ir>, BackendError>;

// I do not condone needlessly cloning Strings, you really should

// not do this, but for now, live with it

#[derive(Error, PartialEq, Debug)]

pub enum BackendError {

#[error("Unknown variable name {0}")]

UnknownVariable(String),

#[error("Undefined function {0}")]

UndefinedFunction(String),

// whatever else...

}

// More on the lifetimes 'ctx and 'ir later...

pub trait LLVMCodeGen {

fn codegen<'ctx: 'ir, 'ir>(&self, context: &LLVMContext<'ctx>)

-> IRGenResult<'ir>;

}

// Implement the backend for the tree on a per node basis

impl LLVMCodeGen for NumberExpr {/*...*/}

impl LLVMCodeGen for VariableExpr {/*...*/}

// in ast.rs, require anything that implements the AST trait to

// implement the backend trait, LLVMCodegen

pub trait AST: Debug + LLVMCodeGen {}This bit of Rust will help us complete part 3 of this LLVM tutorial. It also comes with a few interesting side effects. For one, it demands that the entire AST, every type of node, implements the LLVMCodeGen trait. AST: Debug + LLVMCodeGen states that anything that implements AST must also implement the backend, which makes sense, as in that’s the point of this tree. The second nature of this snippet is that any dynamically dispatched AST trait object now can call codegen.

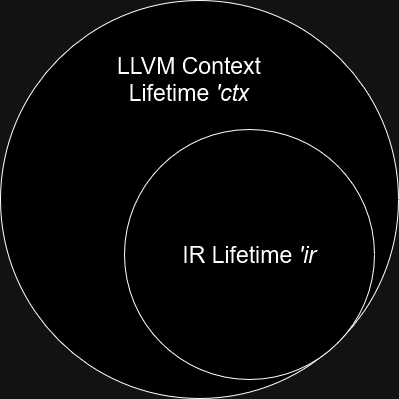

Again, I feel like I have to explain the lifetimes of this codegen method again, sigh… There are two of them this time, ‘ctx and ‘ir. I knew that there would be at least one lifetime involved when working with this API, or so I thought when looking at the inkwell example on the README of their repo. I initially wrote it with just the context lifetime, or ‘ctx. Once I started getting compilers errors on the return values, AnyValueEnum, I noticed that the values produced by builder and context objects did not live as long as their producers. They indeed have their IR, which is distinct but not wholly separate from ‘ctx.

To illustrate this, imagine the lifetimes of these types as eclipsing circles.

The more I thought about it, the more it made sense. Types bound by the context lifetime like the builder will construct IR, but the IR produced may have a shorter lifetime than the builder. LLVM instructions, for example, might be constructed in one breath, then removed later when we run optimizations on the IR to increase speed. Similar story for basic blocks, functions with dead code elimination strategies.

In this sense, the IR lifetime is bounded by the context lifetime <‘ctx: ‘ir>. It is related to the IR insomuch as it can live at least as long as the context, but no longer. Could live shorter. If readers would like to know more about all this nonsense with lifetimes, look at this chapter in the Rustonomicon for details on subtyping and variance with lifetimes.

So, we have a trait, a fallible return type, and a method to implement for that trait. Next is just implementing that trait for the types of AST nodes (NumberExpr, CallExpr, …).

// tutorial implementation

// Value *NumberExprAST::codegen() {

// return ConstantFP::get(*TheContext, APFloat(Val));

// }

// Using context, create a float 64-bit type, then use the type

// to just return a float value as AnyValueEnum.

impl LLVMCodeGen for NumberExpr {

fn codegen<'ctx: 'ir, 'ir>(

&self, context: &LLVMContext<'ctx>

) -> IRGenResult<'ir> {

let float_type = context.context.f64_type();

Ok(float_type.const_float(self.0).as_any_value_enum())

}

}

// tutorial implementation

// Value *VariableExprAST::codegen() {

// Value *V = NamedValues[Name];

// if (!V)

// LogErrorV("Unknown variable name");

// return V;

// }

// For variables, we need to check the variable table, sym_table,

// to look up it's name. Only existing variables should be function

// arguments. If lookup fails propogate error unknown variable.

impl LLVMCodeGen for VariableExpr {

fn codegen<'ctx: 'ir, 'ir>(

&self, context: &LLVMContext<'ctx>

) -> IRGenResult<'ir> {

if let Some(llvm_val) = context.sym_table

.borrow().get(&self.name)

{

Ok(*llvm_val)

} else {

Err(BackendError::UnknownVariable(self.name.clone()))

}

}

}The basic leaf nodes for variables and number constants are quite simple. NumberExpr AST nodes should just generate a LLVM floating point value, return as an AnyEnumValue. This is infallible by nature, so it will never fail. I found it interesting the wrapper lib forced me to use the Context object to first generate a type, and then use that type to grab a floating point constant. The C++ implementation in tutorial used just a single devoted function ConstantFP::get. Perhaps this is because the context in the end holds the space of constants that, in the words of the LLVM tutorial, are all “unique together and shared.”

The variable generation really just a mirror image of the C++ version, a map lookup, where an error is propogated up if you have undefined variables with your scope. The only scope for Kaleidoscope is the function scope.

Referring back to our AST, binary expression nodes, BinaryExpr are not leaves. They have left and right members that are dynamically dispatched trait objects to either, numbers, variables, or yet more binary expressions. We call codegen here for both left and right, then look at the operator of the binary expression node to make a decision on what LLVM instruction to generate. The implementations are really the same, inkwell wrapper uses the builder object to call functions to build add, subtract, multiply, and divide instructions just like the tutorial demonstrates. We can use the vastly superior match syntax here instead of the switch statement.

// tutorial implementation

// Value *BinaryExprAST::codegen() {

// Value *L = LHS->codegen();

// Value *R = RHS->codegen();

// if (!L || !R)

// return nullptr;

// switch (Op) {

// case '+':

// return Builder->CreateFAdd(L, R, "addtmp");

// case '-':

// return Builder->CreateFSub(L, R, "subtmp");

// case '*':

// return Builder->CreateFMul(L, R, "multmp");

// case '<':

// L = Builder->CreateFCmpULT(L, R, "cmptmp");

// // Convert bool 0/1 to double 0.0 or 1.0

// return Builder->CreateUIToFP(L, Type::getDoubleTy(TheContext),

// "booltmp");

// default:

// return LogErrorV("invalid binary operator");

// }

// }

impl LLVMCodeGen for BinaryExpr {

fn codegen<'ctx: 'ir, 'ir>(&self, context: &LLVMContext<'ctx>) -> IRGenResult<'ir> {

// Invoke codegen for left and right so that we have LLVM

// values to pass to builder to build an add, subtract,...

let left = self

.left

.codegen(context)

.map(AnyValueEnum::into_float_value)?;

let right = self

.right

.codegen(context)

.map(AnyValueEnum::into_float_value)?;

// What mathmatical operation are we doing?

// Observe the operator of binary expression

// and build the operation with resulting operands

// Result should be an LLVM instruction like fsub, fadd,

// fmult, so on.

let float_res = match self.op {

Ops::Plus => context.builder.build_float_add(

left, right, &"addtmp"

),

Ops::Minus => context.builder.build_float_sub(

left, right, &"subtmp"

),

Ops::Mult => context.builder.build_float_mul(

left, right, &"multmp"

),

Ops::Div => context.builder.build_float_div(

left, right, &"divtmp"

),

};

Ok(float_res

.expect(

"Irrecoverable: LLVM failed to generate insn").as_any_value_enum())

}

}CallExpr is also a non-leaf node in our AST. It has a vector of arguments that are children expressions. We could invoke our function by giving it constants, variables, or even complex expressions (think of the way we call functions with expressions, func(1), func(x), func(2+2)). We need to generate the IR for all these by, again, dynamic dispatch of the AST trait object. We first try to retrieve the function we are calling from the module, propagating an error of undefined function if not present. We validate the arguments given first by counting them. Kaleidoscope has no concept of functions that accept a variety of different arguments (variadic). The parameter count should be the same as the arguments given for the call.\

After this validation, we generate the IR for all the arguments. There is a bit of type conversion I had to to here to convert every LLVM value to a BasicMetadataValueEnum, which is needed to build the call site with Buidler::build_call. The build call function for inkwell accepts a slice of these metadata value objects. See Builder::build_call in inkwell docs.

impl LLVMCodeGen for CallExpr {

fn codegen<'ctx: 'ir, 'ir>(&self, context: &LLVMContext<'ctx>) -> IRGenResult<'ir> {

// First, make sure the function exists to call

let function = context

.module

.get_function(&self.name)

.ok_or(BackendError::UndefinedFunction(self.name.clone()))?;

// Validate argument counts passed to the call

let param_cnt = function.count_params();

if param_cnt != self.args.len() as u32 {

return Err(BackendError::IncorrectNumberOfArgs {

func_name: self.name.clone(),

param_cnt,

});

}

// If all that goes well, we can build a call

// codegen every argument in call expression, collect

// into a vec. Needs to be slice of BasicMetadataValueEnum

let llvm_val_args = self

.args

.iter()

.map(|arg| arg.codegen(context))

.collect::<Result<Vec<_>, BackendError>>()?;

let llvm_val_args: Vec<BasicMetadataValueEnum> = llvm_val_args

.into_iter()

.map(|val| BasicMetadataValueEnum::FloatValue(val.into_float_value()))

.collect();

let call = context

.builder

.build_call(function, llvm_val_args.as_slice(), &"calltmp")

.expect("Irrecoverable: LLVM failed to build call expression");

Ok(call.as_any_value_enum())

}

}Implementing codegen for Function introduces some concepts that may be foreign to some. It starts off by fetching the function from the module, else eagerly evaluating the prototype and adding it there. With the FunctionValue, we then create the first basic block for the function, dubbed entry.

Basic blocks are a compiler construct are basically code sequences with no control flow, save for their entry and exit. They are used in as an abstraction of sorts to make sense of control flow, decision making in code. LLVM API provides both a basic block object and and different functions and methods to operate on them. Here, we create the basic block, then position our cursor for builder at the end of that block. Our language currently does not have control flow, so every function will have a single block, “entry,” that will run from beginning to end. We use this as a cursor of sorts for the builder, so that when generate the body later on, it produces instructions inside the block, filling it up.

Another important part of this piece of code is updating the sym_table in our LLVMContext object. We need to make sure the function scope of variables is resolvable here, so the names are set here.

impl LLVMCodeGen for Function {

fn codegen<'ctx: 'ir, 'ir>(

&self, context: &LLVMContext<'ctx>

) -> IRGenResult<'ir>

{

let fn_val = match context.module.get_function(&self.proto.name) {

Some(fn_val) => fn_val,

None => self.proto.codegen(context)?.into_function_value(),

};

// Easy way I figured out to check if I had already defined

// the function. Check if the entry is already there.

if fn_val.get_first_basic_block().is_some() {

return Err(

BackendError::MultipleFunctionDefs(self.proto.name.clone())

);

}

// Create a basic block, set cursor for builder at end.

let bb_entry = context.context.append_basic_block(fn_val, "entry");

context.builder.position_at_end(bb_entry);

// Update the symbol table with the args names and references

// to their LLVM values, need to resolve them in current function

// scope

context.sym_table.borrow_mut().clear();

for arg in fn_val.get_params() {

// TODO: Change the named value key to a non-owned CStr reference

// so I am not copying and cloning to Rust Strings

let owned_str = arg.into_float_value()

.get_name().to_str().unwrap().to_string();

context

.sym_table

.borrow_mut()

.insert(owned_str, arg.as_any_value_enum());

}

let ir_body = self.body.codegen(context)?;

context

.builder

.build_return(Some(

&ir_body.into_float_value() as &dyn BasicValue)

);

if !fn_val.verify(true) {

return Err(BackendError::FailedToVerifyFunc(self.proto.name.clone()))

}

Ok(fn_val.as_any_value_enum())

}

}Creating Another REPL To Verify the Work Thus Far

To verify the work we’ve done thus far, the tutorial demonstrates the use of a REPL to illustrate the compilation of LLVM IR in real time. I provided a REPL last post as well, so will work off that backbone of that one. This one is found in repl.rs under name llvm_ir_gen_driver.

Some(Token::FuncDef) => match parse_definition(&mut tokens) {

Ok(ast) => {

println!("Parsed a function definition.");

// Attempt to generate code from the AST

// Dumping the contents of compiled LLVM module

// to screen, otherwise printing error.

match ast.codegen(&sesh_ctx) {

Ok(ir) => sesh_ctx.dump_module();

Err(e) => eprintln!("Backend error: {}", e)

}

}

// Failed to parse? just print the error, no AST

// to call codegen on.

Err(err) => {

eprintln!("Frontend Error: {}", err);

_ = tokens.next();

}

},

//...

Some(_top_level_token) => match parse_top_level_expr(&mut tokens) {

Ok(ast) => {

println!("Parsed a top level expression.");

match ast.codegen(&sesh_ctx) {

Ok(ir) => sesh_ctx.dump_module(),

Err(e) => eprintln!("Backend error: {}", e),

}

// We need to delete this top level anonymous function

// afterward, so the REPL can create another when

// we input another top level expression, otherwise

// LLVM will complain that __anonymous_expr is already

// defined

sesh_ctx.delete_top_level_expr();

}

Err(err) => {

eprintln!("Frontend Error: {}", err);

_ = tokens.next();

}

},kaleidrs$ cargo b

Compiling kaleidrs v0.1.0 (/home/jdorman/projects/langs-test/kaleidrs)

Finished `dev` profile [unoptimized + debuginfo] target(s) in 1.20s

kaleidrs$ cargo r

Finished `dev` profile [unoptimized + debuginfo] target(s) in 0.02s

Running `target/debug/kaleidrs`

Ready >> 2 + 2;

Parsed a top level expression.

; ModuleID = 'kaleidrs_module'

source_filename = "kaleidrs_module"

define double @__anonymous_expr() {

entry:

ret double 4.000000e+00

}

Ready >> (60*2) / 20;

Parsed a top level expression.

; ModuleID = 'kaleidrs_module'

source_filename = "kaleidrs_module"

define double @__anonymous_expr() {

entry:

ret double 6.000000e+00

}

Ready >> def dub(num) num*2;

Parsed a function definition.

; ModuleID = 'kaleidrs_module'

source_filename = "kaleidrs_module"

define double @dub(double %num) {

entry:

%multmp = fmul double %num, 2.000000e+00

ret double %multmp

}

Ready >> dub(400.2) - 200;

Parsed a top level expression.

; ModuleID = 'kaleidrs_module'

source_filename = "kaleidrs_module"

define double @dub(double %num) {

entry:

%multmp = fmul double %num, 2.000000e+00

ret double %multmp

}

define double @__anonymous_expr() {

entry:

%calltmp = call double @dub(double 4.002000e+02)

%subtmp = fsub double %calltmp, 2.000000e+02

ret double %subtmp

}

Ready >> dub();

Parsed a top level expression.

Backend error: Incorrect number of arguments passed to dub, expected 1

Ready >> some_var - 3;

Parsed a top level expression.

Backend error: Unknown variable name some_varFrom IR, Where To?

With IR in hand, the path forward from here becomes a choose-your-own-adventure of sorts. We can take it and perform optimizations on it with the LLVM optimization suite, perform analyses on it, perform compilation to produce static object code for a particular target, or even JIT compile it to run the IR in real time. The IR is the lingua franca of sorts to all of the compiler infrastructure, so the world is your oyster.

The tutorial goes on to do optimizations and JIT in the next chapter, so this series of blog posts will follow that. Stayed tuned…

Leave a Reply